The pattern beyond the pixels

Once upon a time I started a new job. I didn’t do it lightly. In fact, I fought against it for a long time, but I did take it in the end. My reluctance was 50% ego and 50% having been burnt by promises before. Ego-wise: I wanted the tasks and responsibilities, but the title felt like a step down and a potential trap. I had been promised the world before, only to realize that once I was in place, those promises melted away and meant nothing unless they were on paper.

The manager in question needed a right-hand person to help take the current QA department to the next level – on several related but independent topics. They needed to skill up to stay relevant. They needed to continue pushing and modernizing ways of working, processes, and tools. But more importantly: a lot had already been done and was in place. And the organization had really invested in it. There had been a big push in automation and tooling. They had added a lot of headcount and managed to make automation (and testing and requirement work) a part of the teams’ normal delivery. I had, at this point in my career, been part of more than one failed automation initiative, so if there was one thing I really felt confident in – it was what not to do. I thought it sounded really nice to get to work with a company that didn’t try to save as much money as possible on testing, so I decided to take it.

This will be a multi-post series telling that story: what happened, what I learned, and what you can use to avoid making the same mistakes I did. But let’s get back to the story.

Vision vs. Reality

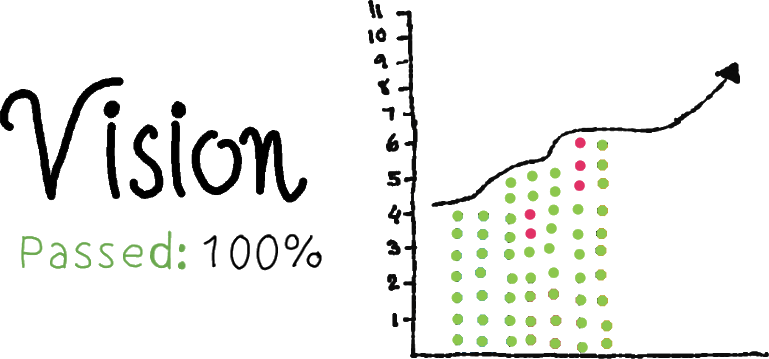

I stepped into that office with a vision. A dashboard of greens and a room full of people who would jump to fix problems that occurred. A slow and steady climb of automation and a curious, problem-solving mindset. Like this:

To give some context before I continue: when I say automation here, we are not talking about unit or integration tests done by developers as part of the pipeline, and we are not talking about “non-functional” tests like security and performance, which were different initiatives here. We are talking about functional tests, mostly end-to-end and through a graphical interface of some kind. There were API-level tests and there were tests that didn’t test whole flows – but you hopefully get the picture. These are tests that you can’t run before every check-in or as a part of the pipeline. They simply take too long. For us, they were run nightly – and the responsibility to analyze and handle findings lay on the testers, who were integrated into the different teams. With that said – back to the story.

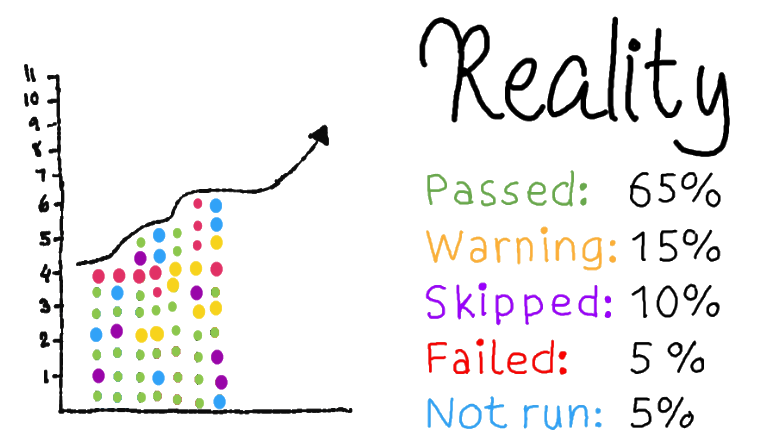

I would open my computer every morning, expecting something like the graph above. I would have a report in my mailbox, giving me loads of information about the current state. There were screenshots and videos, and things were traceable and nice. I had all the information I could expect given to me on a silver platter. The report, however, did not look like that. It looked more like this:

At first, I didn’t worry much about it. I saw people working, I heard people saying the right things. Management seemed happy. With time, I started getting a sense that something was off. I couldn’t put my finger on what it was, but my spider senses (call it gut feeling, experience, pattern matching skills) were going off like crazy. I didn’t see any improvement. I heard fragments of information that worried me. I could feel in my bones that there was a pattern here, and it became clear other people were not seeing it (or did but chose not to act).

I am not great at letting things go. I can accept a lot of things, but I have a deep need to understand to be able to move on. I felt like I would never be able to understand what was going on by only looking at the daily results, or without getting people to tell me more (or different) information. So I decided two things: that I needed to find more data, a lot more data, and that I needed to start figuring out the right questions to ask.

Luckily, I could get my hands on all of the historical data from the last five years. (It took up an insane amount of space, which we later fixed, but it was a treasure for me to dig into.) And, after I had started going through four months of my mailbox and manually putting the results into a spreadsheet, a developer/tester heard me complain and quickly understood what I was trying to do. In less than a day we had set up a process for extracting the data on a monthly basis (or on demand), and then we could get to work on analysing and forming hypotheses.
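For readers who want to try something similar, here is a minimal sketch of the kind of summary such extracted data makes possible. It is not the process we actually built. The CSV layout (`date`, `test`, `status`), the test names, the sample rows, and the `summarize` function are all assumptions made up for illustration. The idea is to look past a single night’s result and count, per test, how often it ran, how often it failed, and how often it flipped between pass and fail.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export format: one row per test per nightly run.
# Column names, test names, and rows are invented for illustration.
SAMPLE_CSV = """date,test,status
2024-01-01,login_flow,pass
2024-01-02,login_flow,fail
2024-01-03,login_flow,pass
2024-01-01,checkout_flow,fail
2024-01-02,checkout_flow,fail
2024-01-03,checkout_flow,fail
"""


def summarize(csv_text):
    """Return {test: {"runs", "failures", "flips"}} from nightly result rows."""
    history = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        history[row["test"]].append((row["date"], row["status"]))

    summary = {}
    for test, runs in history.items():
        runs.sort()  # ISO dates sort chronologically as plain strings
        statuses = [status for _, status in runs]
        # A "flip" is a change of status between two consecutive nights.
        flips = sum(1 for a, b in zip(statuses, statuses[1:]) if a != b)
        summary[test] = {
            "runs": len(statuses),
            "failures": statuses.count("fail"),
            "flips": flips,
        }
    return summary


if __name__ == "__main__":
    for test, stats in sorted(summarize(SAMPLE_CSV).items()):
        print(test, stats)
```

A test that fails every night and a test that flips back and forth look identical in one nightly report, but they usually call for different questions, which is why a history-based view can be a useful starting point for hypotheses.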

At the same time, and getting better at it as I formed guesses from the data, I talked to people. I talked to developers, testers, scrum masters, product owners and management. I asked what they thought of the automation, what they would like to change, their points of frustration, about their daily work and ways of working. I asked open questions and very pointed questions. I asked about needs and wants and why certain choices had been made. Mostly, I tried to be friendly and curious above all, to avoid people feeling criticized and to increase the chance of getting useful information.

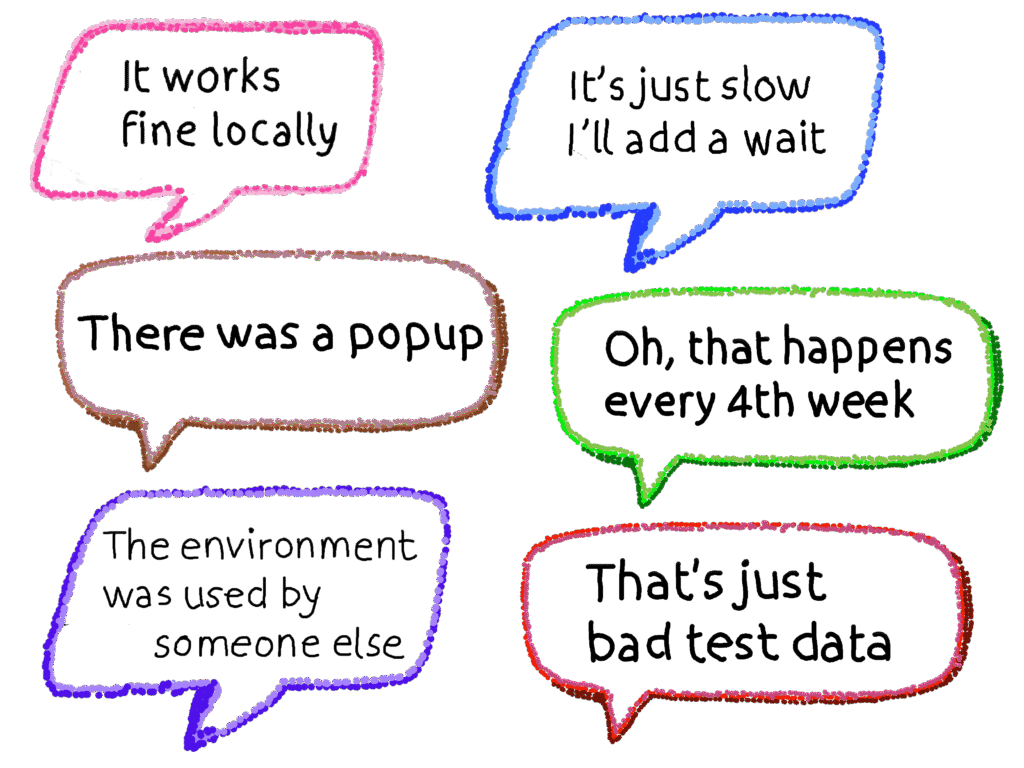

I got a lot of interesting responses. Some unexpected, some I should have seen coming a mile away. Let’s end this first part with some of them, and then in the coming parts I’ll dig into my learnings and how you can use them to get better results than I first managed.

Disclaimer: These are not formal quotes; they are my interpretations of long conversations. They might be polished, but none are lies.

I found these very interesting. And frustrating. And enlightening. Are there any that stick out to you? Any that give you some ideas of where we might have problems? Any that have enraged you in the past, or possibly ones you might even be using yourselves?

In the next part we’ll dig into my first big learning – as I embarked on the journey from having an idea of the underlying problems to trying to realize an actual change.